Engineering Syook InSite: Challenges While Building an IoT Product

- Aman Agarwal

- May 18, 2021

- 5 min read

Updated: Aug 20, 2021

As we gear up for the launch of the first version of our flagship IoT product, InSite, I thought this would be the perfect time to look back at our journey and share what we’ve learned over the past year. This is the first in a series of blog posts from the engineering team at Syook.

The effort that goes into building an industrial IoT product is huge: countless nights at the office, and painstaking arguments and discussions as we tried to get things right the first time instead of iterating and rebuilding frequently. It took us over a year to move from a POC to a product that has been accepted by big clients like Unilever and Delhivery (with a few more to be announced soon).

The team has grown from two to twenty people (and we’re looking to hire more, too). We moved from outsourced hardware to in-house R&D, after a stint with a Chinese supplier that taught us a lot about building robust IoT hardware.

The stack we are using is JavaScript (NodeJS + ReactJS + React Native), with an additional service written in Java. For most of us (myself included), building apps in JavaScript was a first, and we had a lot to learn.

Here are a few challenges we faced while engineering InSite:

For those who just want the TL;DR, here’s a quick summary:

- NoSQL can be tricky. Refer to best practices before modeling your DB.
- Memory leaks are a nightmare, best avoided with a proper understanding of the processing environment.
- Use NodeJS child processes to mitigate event loop delay and avoid processing data on the main thread.
- IoT gateways need to be built for robustness.
- Hire specialists with the right domain knowledge.
- Work with consultants until you are ready to build capabilities in-house.
- Build dynamic, configurable features that let users build what they want, instead of hard-coding everything yourself.

The details for those of you who’ve stuck around to read more:

Challenges with NoSQL databases: We wanted the flexibility of a NoSQL database, and MongoDB ranks pretty high, so it was our first choice. We did some schema modeling with Mongoose but, being first-timers, we made a few glaring modeling mistakes that caused memory bloat. Having resolved these issues over a few months, we can say with confidence that NoSQL data modeling is not at all straightforward, and one should definitely refer to the best practices and guidelines for database modeling. Here are a few good references that clarified a lot of concepts for us.
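The specific modeling mistakes aren’t described above, so the following is only a hypothetical sketch of one widely documented MongoDB pattern for sensor time series: the bucket pattern. Instead of one document per reading, or one ever-growing array per sensor, readings are grouped into bounded documents (here, assumed to be one per sensor per hour). This is not InSite’s actual schema; it only builds the filter and update documents you would pass to an upsert such as `updateOne(filter, update, { upsert: true })`, and the field names (`sensorId`, `bucketStart`, `readings`, `count`) are assumptions.

```javascript
// Hypothetical bucket-pattern helper: one document per sensor per hour,
// so no single document (or in-memory copy of it) grows without bound.
const BUCKET_MS = 60 * 60 * 1000; // assumed bucket size: one hour

// Round a timestamp down to the start of its bucket.
function bucketStart(timestampMs) {
  return new Date(Math.floor(timestampMs / BUCKET_MS) * BUCKET_MS);
}

// Build the arguments for collection.updateOne(filter, update, options).
// With upsert: true, the first reading in an hour creates the bucket document.
function bucketUpsert(reading) {
  return {
    filter: {
      sensorId: reading.sensorId,
      bucketStart: bucketStart(reading.timestampMs),
    },
    update: {
      $push: { readings: { t: new Date(reading.timestampMs), v: reading.value } },
      $inc: { count: 1 },
      $min: { minValue: reading.value }, // running per-bucket summaries
      $max: { maxValue: reading.value },
    },
    options: { upsert: true },
  };
}
```

Keeping per-bucket summaries (`count`, `minValue`, `maxValue`) in the document also means many dashboard queries can read a handful of small fields instead of loading every raw reading into memory.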

Memory leaks: A big lesson for us was troubleshooting memory bloat and memory leaks in Node (self-inflicted, due to our lack of database modeling knowledge). Our first version would run for five minutes and then crash due to memory leaks. Why? We had to play Sherlock for about a month to figure out the root cause (I still need to sharpen my deductive reasoning, I guess). There are a lot of articles about how to find memory leaks, but most of them are not straightforward. We used heap dumps, flame graph analysis, manual garbage collection tweaking, and a few other techniques to figure out that the core issue lay in how we had modeled the database in the first place: we were loading a lot of data into RAM and not releasing it properly. Given that IoT data was being processed every two seconds, memory grew very quickly and the app crashed within five minutes.
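The real fix described above was at the database-modeling level, so the following is only a hypothetical illustration of the general principle: cap how much raw data stays in RAM, and watch heap usage over time. `BoundedWindow`, `maxPerSensor`, and `logHeap` are illustrative names, not InSite code; `process.memoryUsage()` is Node’s built-in API.

```javascript
// Hypothetical bounded in-memory window: keeps only the most recent
// `maxPerSensor` readings per sensor, so memory stays proportional to
// (number of sensors x maxPerSensor) instead of growing with every tick.
class BoundedWindow {
  constructor(maxPerSensor) {
    this.maxPerSensor = maxPerSensor;
    this.bySensor = new Map(); // sensorId -> array of recent readings
  }

  add(sensorId, value) {
    let buf = this.bySensor.get(sensorId);
    if (!buf) {
      buf = [];
      this.bySensor.set(sensorId, buf);
    }
    buf.push(value);
    // Drop the oldest reading once the cap is exceeded, so it is no longer referenced.
    if (buf.length > this.maxPerSensor) buf.shift();
  }

  // Forget a sensor entirely (e.g. when decommissioned) so its buffer
  // does not linger in the Map forever.
  remove(sensorId) {
    return this.bySensor.delete(sensorId);
  }

  recent(sensorId) {
    return this.bySensor.get(sensorId) || [];
  }
}

// Log heap usage; a steady upward trend under constant load is a common
// first hint of a leak, before reaching for heap dumps.
function logHeap(label) {
  const { heapUsed } = process.memoryUsage();
  console.log(`${label}: heapUsed=${(heapUsed / 1024 / 1024).toFixed(1)} MiB`);
}
```

In a long-running service, `logHeap` might be called from a `setInterval` so the trend shows up in the logs long before a crash.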

Loop delay issues: Node runs all JavaScript on a single main thread, so any heavy computation there delays every other request waiting on the event loop. Like every other first-timer, we, too, ended up facing issues with the event loop. Since this was our first application and we had to handle all the real-time data processing in Node (it’s still up for debate whether we need to switch to something faster, like Go), we iterated a lot to perfect the processing of the real-time data generated by the IoT sensors. How did we mitigate the loop delay? Child processes to the rescue! Moving the heavy processing into separate Node processes keeps the main event loop free to respond.
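The exact setup isn’t detailed above, so here is a minimal sketch, assuming the heavy work is a per-batch computation whose input and output can be sent as JSON. It uses Node’s built-in `child_process.spawn` with an `"ipc"` stdio entry, which gives the parent `child.send()` and the child `process.send()`. `processInChild`, `WORKER_SOURCE`, and the summary it computes are hypothetical stand-ins for real processing.

```javascript
const { spawn } = require("child_process");

// Code run inside the child process: a hypothetical stand-in for
// CPU-heavy processing of a batch of sensor readings.
const WORKER_SOURCE = `
process.on("message", (readings) => {
  let sum = 0;
  let max = null;
  for (const r of readings) {
    sum += r.value;
    if (max === null || r.value > max) max = r.value;
  }
  process.send({
    count: readings.length,
    mean: readings.length ? sum / readings.length : null,
    max,
  });
});
`;

// Send a batch to a separate Node process over IPC and resolve with its result.
// The parent's event loop stays free while the child does the work.
function processInChild(readings) {
  return new Promise((resolve, reject) => {
    const child = spawn(process.execPath, ["-e", WORKER_SOURCE], {
      // The fourth stdio entry "ipc" opens the message channel.
      stdio: ["ignore", "inherit", "inherit", "ipc"],
    });
    child.once("error", reject);
    child.once("message", (result) => {
      child.disconnect(); // closing the channel lets the child exit
      resolve(result);
    });
    child.once("exit", (code) => {
      if (code !== 0) reject(new Error(`worker exited with code ${code}`));
    });
    child.send(readings);
  });
}
```

Spawning a fresh process per batch keeps the sketch short; with data arriving every two seconds, a real service would more likely keep one or more long-lived children (or use `worker_threads`) to avoid paying process startup cost on every batch.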

Physical gateway vs virtual gateway: Our first version of the hardware relied on a physical gateway that collected data from all the other readers and then sent it to the main server for processing. This had certain advantages (edge computing on the gateway minimized bandwidth utilization), but it also made the gateway a single point of failure if anything went wrong. A single gateway also limited the number of sensors we could deploy, since any physical device has finite bandwidth. We replaced the physical gateway with a virtual gateway: each reader now sends data directly to the cloud, where it is processed.
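The gateway change is architectural rather than a code-level detail, but a hypothetical sketch may make the difference concrete. Assume each reader posts its messages straight to a cloud ingestion endpoint; a server-side `VirtualGateway` object (an illustrative name, not InSite’s code) forwards each message for processing and tracks when each reader was last heard from, so a silent reader is flagged on its own instead of taking every other reader down with it. `staleAfterMs` and `onReading` are assumed parameters.

```javascript
// Hypothetical "virtual gateway": a server-side component that takes over
// the physical gateway's role, but tracks each reader independently.
class VirtualGateway {
  constructor({ staleAfterMs, onReading }) {
    this.staleAfterMs = staleAfterMs; // assumed heartbeat tolerance
    this.onReading = onReading;       // downstream processing callback
    this.lastSeen = new Map();        // readerId -> time of last message (ms)
  }

  // Called for every message a reader sends directly to the cloud.
  ingest(readerId, payload, nowMs = Date.now()) {
    this.lastSeen.set(readerId, nowMs);
    this.onReading(readerId, payload);
  }

  // Readers that have not reported within staleAfterMs. With a physical
  // gateway, one failure would have silenced every reader at once.
  staleReaders(nowMs = Date.now()) {
    const stale = [];
    for (const [readerId, seen] of this.lastSeen) {
      if (nowMs - seen > this.staleAfterMs) stale.push(readerId);
    }
    return stale;
  }
}
```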

Reader characterization issues: Any hardware that sends data needs to be characterized for its behavior so that the application’s response is predictable. This was another major learning for us. Given our oilfield background, we knew how to build applications that rely on data from hardware, but characterizing the hardware itself was a total googly we had to handle. We ended up hiring hardware specialists for this particular task, and the results have been amazing.
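How the specialists characterized the readers isn’t described above. As a hypothetical illustration only, a common first step is to capture many readings from one reader under fixed, controlled conditions and summarize them, so the application knows what normal noise and packet loss look like for that device. `characterize` and `expectedCount` (how many samples the reader should have sent during the capture) are illustrative names.

```javascript
// Hypothetical characterization summary for one reader: typical value,
// spread, range, and the fraction of expected samples that never arrived.
function characterize(samples, expectedCount) {
  const n = samples.length;
  if (n === 0) throw new Error("no samples captured");

  const mean = samples.reduce((a, b) => a + b, 0) / n;
  // Sample standard deviation (n - 1 in the denominator).
  const variance =
    n > 1 ? samples.reduce((acc, x) => acc + (x - mean) ** 2, 0) / (n - 1) : 0;

  return {
    n,
    mean,
    stdDev: Math.sqrt(variance),
    min: samples.reduce((m, x) => Math.min(m, x), Infinity),
    max: samples.reduce((m, x) => Math.max(m, x), -Infinity),
    dropRate: expectedCount > 0 ? 1 - n / expectedCount : 0,
  };
}
```

Comparing these summaries across readers, or across conditions such as distance and mounting position, is one way to decide which deviations the application should treat as noise and which as real events.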

Design iterations: We didn’t have in-house product design expertise, so for the POC we created a barebones UI that was effective enough to demonstrate the potential of what we were trying to achieve. Once the POC passed validation with our first client, we knew we had a lot of work to do to revamp the UI/UX and make it more user-friendly. Enter Tinkerform, our design consultants! They are a bunch of IIT-G graduates who work with startups, helping them conceptualize the product by building user stories and then delivering the workflows and the final UI. Over the past year, our engagement with them has been highly productive, and we are continuing to build with them.

Metrics: Dashboards are the heart of any B2B application, and graphs are integral to data visualization. We started out with basic graphs that we thought could be useful, but soon realized it was better to give users the option to visualize data any way they wanted. To this end, we built a configurable metrics module where users can add and configure any kind of widget to visualize data in their preferred format.
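A configurable metrics module stores each widget as data rather than code. The sketch below is hypothetical: the config shape (`field`, `aggregation`, `intervalMs`) and `computeWidgetSeries` are assumptions, not InSite’s API. It shows how a saved widget definition alone can determine what series a chart plots.

```javascript
// Hypothetical widget config, saved per user instead of hard-coded, e.g.
// { title: "Avg temperature", field: "temperature", aggregation: "avg", intervalMs: 60000 }
const AGGREGATIONS = new Map([
  ["avg", (xs) => xs.reduce((a, b) => a + b, 0) / xs.length],
  ["min", (xs) => xs.reduce((m, x) => Math.min(m, x), Infinity)],
  ["max", (xs) => xs.reduce((m, x) => Math.max(m, x), -Infinity)],
  ["count", (xs) => xs.length],
]);

// Turn raw readings into the series a chart widget would plot,
// driven entirely by the widget's configuration.
function computeWidgetSeries(config, readings) {
  const aggregate = AGGREGATIONS.get(config.aggregation);
  if (!aggregate) throw new Error(`unknown aggregation: ${config.aggregation}`);

  // Group values of the configured field into fixed time buckets.
  const buckets = new Map();
  for (const r of readings) {
    const value = r[config.field];
    if (typeof value !== "number") continue; // skip readings without this field
    const start = Math.floor(r.timestampMs / config.intervalMs) * config.intervalMs;
    if (!buckets.has(start)) buckets.set(start, []);
    buckets.get(start).push(value);
  }

  // Emit points in time order, one aggregated value per bucket.
  return [...buckets.entries()]
    .sort(([a], [b]) => a - b)
    .map(([bucketStart, values]) => ({ bucketStart, value: aggregate(values) }));
}
```

Adding a new aggregation then means adding one entry to the map, with no change to the widgets users have already configured.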

Dynamic rules engine: One of the most important aspects of InSite is the dynamic rules engine, which sends alerts whenever a rule is broken. Just like everything else, we didn’t have much clarity at first on how we were going to build this, but a big shout-out to the devs who cracked it. It is inspired by IFTTT-style workflows, and setting up rules is now pretty straightforward. Looking ahead, we aim to make this engine more powerful and support more complex rules.
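The engine’s internals aren’t described above, so here is a minimal, hypothetical sketch of the IFTTT idea: each rule is plain data, "IF every condition in `when` holds THEN perform `action`", and a small evaluator checks incoming events against all stored rules. The rule shape, the operator set, and `evaluateRules` are assumptions for illustration.

```javascript
// Supported comparison operators (an assumed, deliberately small set).
const OPERATORS = new Map([
  [">", (a, b) => a > b],
  [">=", (a, b) => a >= b],
  ["<", (a, b) => a < b],
  ["<=", (a, b) => a <= b],
  ["==", (a, b) => a === b],
  ["!=", (a, b) => a !== b],
]);

// A rule fires only if every condition in `when` holds for the event.
// Missing fields never match, so incomplete events cannot trigger alerts.
function ruleMatches(rule, event) {
  return rule.when.every(({ field, op, value }) => {
    const compare = OPERATORS.get(op);
    if (!compare) throw new Error(`unknown operator: ${op}`);
    return field in event && compare(event[field], value);
  });
}

// Evaluate all rules against one incoming event and return the alerts to send.
function evaluateRules(rules, event) {
  return rules
    .filter((rule) => ruleMatches(rule, event))
    .map((rule) => ({ ruleId: rule.id, action: rule.action, event }));
}

// Example rules as a user might create them from the UI (hypothetical).
const exampleRules = [
  { id: "overheat", when: [{ field: "temperature", op: ">", value: 60 }], action: { type: "email" } },
  {
    id: "restricted-zone",
    when: [{ field: "zone", op: "==", value: "Z3" }, { field: "hour", op: ">=", value: 22 }],
    action: { type: "sms" },
  },
];
```

Because rules are data, users can add or edit them without a deployment; supporting more complex rules (nested any/all groups, time windows) would mean extending the `when` structure and `ruleMatches`, not the code that calls them.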

I would like to extend a very special thanks to all the people who advised and helped us along this journey as we faced these challenges. It has been a roller coaster of a ride and wouldn’t have been possible without their help. Also, a big shout-out to the open-source community and everyone who contributes to the fantastic libraries we use. We encourage our devs to make open-source contributions, since we know how heavily we rely on this community for help and support.

And, to finish, a glimpse into what we are building:

We are exploring some ML / AI for improving the accuracy of our positioning engine

We are also working on building a more robust and accurate business intelligence and analytics engine that will help our clients gain actionable insights

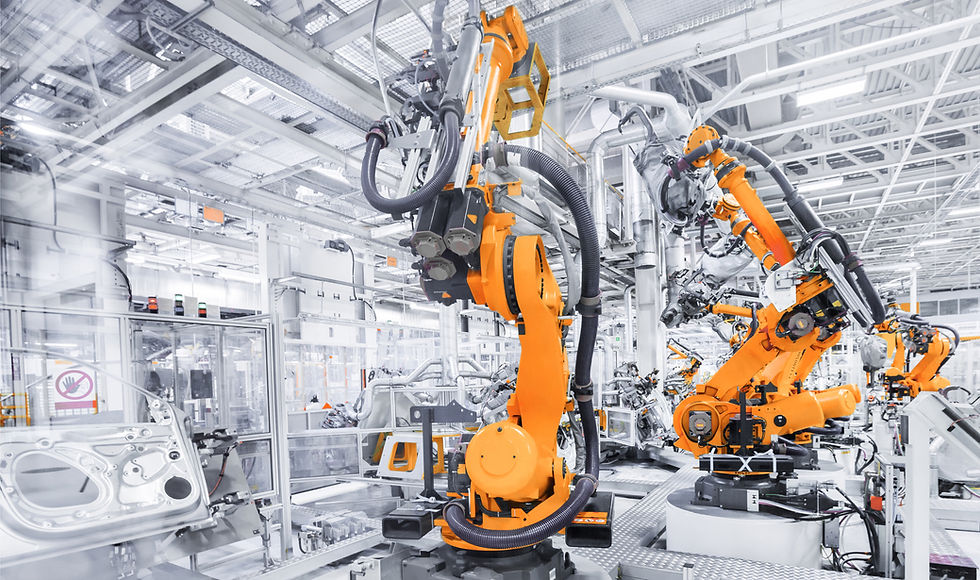

We are looking to expand our IoT offering to include a host of sensors that are essential for a smart factory (IIoT 4.0)

And we will definitely be focusing on performance enhancements as we scale along the way

That’s all for now, folks! Till the next post…
